C.P. Ravikumar, Texas Instruments

How a society treats its disabled is the true measure of a civilization.

In this blog post, I will look at three projects in the area of Assistive Technologies – an emerging area where technological innovations are used to help physically challenged persons. The first project is intended to help elderly patients, possibly with visual impairment, who may have to take their own medication in the absence of a caregiver. The second project attempts to provide a prosthetic replacement for fingers that may have been lost in an accident. The third project deals with automatic recognition of gestures and audio playback of information about the detected gestures for visually impaired users.

You will find links to YouTube videos of these project demonstrations in this blog post. These projects will be demonstrated in person at the TI India Educators’ Conference on April 5, 2014. The technical program of TIIEC 2014 is now available at tiiec.in – please take a look!

Take two white pills before your meal

Kshitiz Gupta, Arpit Jain, P. Harsha Vardhan, Sumeet Singh, and Aashish Amber of IIT Guwahati, working with Prof. Amit Sethi, have built an automated medication kit, entitled MedAssist.

“When a doctor prescribes medicines to a patient, there will typically be many guidelines about the time at which the medicine must be taken, the dosage of the medicine, and so on,” explains Kshitiz. “Unfortunately, patients may not adhere to these guidelines due to forgetfulness or other reasons. Our project is to help such patients to take the correct medication as per the doctor’s prescriptions.”

“We target elderly people living in rural areas,” adds Arpit. “So we used a very simple interface for the user device. For instance, a loud sound is used to alert a patient when it is time to take a specific medicine.”

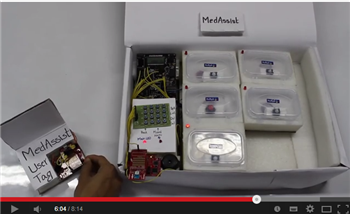

“The project hardware includes a MedAssist box and a user tag,” says Harsha Vardhan. “The MedAssist box uses a TI MSP430FG4618 microcontroller development kit as the basic building block. The user tag is based on the MSP430 LaunchPad along with an RF BoosterPack (430BOOST-CC110L).”

“The MedAssist box has several compartments, one for each type of medicine. A caregiver must use the keypad interface provided to enter the details of the prescription. An LED and a buzzer are provided on the door of each compartment. The LED and the buzzer corresponding to the appropriate compartment will turn on to attract the attention of the patient. An LCD display will show the required dosage. When the compartment is opened, the LED and the buzzer will be turned off. The buzzer is intended for a patient with visual impairment.”
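The alert behaviour described above can be sketched in a few lines. This is only an illustrative model, not the team's firmware: the `Compartment` class, its fields, and the `lcd_text` helper are hypothetical names, and the `taken` flag is an assumption added so that an opened compartment does not start alerting again within the same minute.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Compartment:
    """One medicine compartment with its door-mounted LED and buzzer (hypothetical model)."""
    medicine: str
    dose_time: time          # time of day entered by the caregiver on the keypad
    dosage: str              # text to show on the LCD, e.g. "2 pills"
    alerting: bool = False   # True while the LED and buzzer are on
    taken: bool = False      # assumption: remember that the door was opened

    def tick(self, now: time) -> None:
        """Turn the LED and buzzer on when the scheduled minute is reached."""
        due = (now.hour, now.minute) == (self.dose_time.hour, self.dose_time.minute)
        if due and not self.taken:
            self.alerting = True

    def door_opened(self) -> None:
        """Opening the compartment turns the LED and buzzer off."""
        self.alerting = False
        self.taken = True


def lcd_text(compartments):
    """Return one dosage line for every compartment that is currently alerting."""
    return [f"{c.medicine}: {c.dosage}" for c in compartments if c.alerting]
```

For example, a box with a morning pill and an evening syrup would alert only the first compartment at 8:00, and opening that compartment clears the alert.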

“Use of RF communication helps in allowing the patient to move about as long as he/she is within the range of wireless communication,” adds Aashish. “The cost of the box can be brought down to around $25, making it affordable.”

You may view the demonstration of the project here.

The Moving Finger – not by Agatha Christie

Mahanth G N, Sachin B C, Shankar Kumar J and Vinay S N of Channabasaveshwara Institute of Technology, Karnataka, worked with Prof. Vijay P Sompur on a project related to design of prosthetic finger replacements using surface EMG signal acquisition.

“The inspiration was a personal life experience,” says Shankar. “One of our schoolmates was physically challenged, and we wanted to help people like him. Several people lose their fingers in accidents or for other reasons. The basic idea in our project is to acquire surface EMG signals and use them to provide the functionality of human fingers.”

“EMG stands for electromyogram, which is a measure of the electrical activity of the muscles,” explains Mahanth. “It is a signal generated by the brain to command a human locomotory organ such as a finger. If the finger has been severed, the surface EMG signals can still be acquired and a prosthetic finger can be made to move in response to the signal.”

“We used an extensive signal acquisition circuit based on TI’s analog components,” says Sachin. “The surface EMG signals are picked up by Ag/AgCl electrodes. The instrumentation amplifier INA114AP amplifies the difference between the EMG signals picked up at two points. The output of the amplifier is rectified using a precision full-wave rectifier based on the TL082CP. The output of the rectifier is smoothed using an integrator based on the TL082CP. The output of the integrator is amplified using another TL082CP operational amplifier to get an analog signal of sufficient strength.”
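To see why rectifying and then smoothing yields a usable control signal, here is a rough digital analogue of those two analog stages. It is a sketch only: the team's circuit does this in hardware, the function name `emg_envelope` is hypothetical, and a moving average stands in for the analog integrator as a simplifying assumption.

```python
def emg_envelope(samples, window=4):
    """Full-wave rectify the samples, then smooth them with a moving average.

    A raw EMG signal swings both positive and negative, so its plain average
    is close to zero. Taking the absolute value first (rectification) makes
    the smoothed output track how strongly the muscle is active.
    """
    rectified = [abs(x) for x in samples]
    envelope = []
    running = 0.0
    for i, x in enumerate(rectified):
        running += x
        if i >= window:
            # Drop the sample that just left the averaging window.
            running -= rectified[i - window]
        envelope.append(running / min(i + 1, window))
    return envelope
```

An alternating input such as `[1, -1, 1, -1]` averages to zero, but its envelope stays at 1, which is the kind of slowly varying level a microcontroller ADC can sample.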

“We used the MSP430G2253 MCU from Texas Instruments to process the EMG signal. The built-in 10-bit ADC of the MCU converts the analog signal to a digital value. This value is compared to a nominal value that corresponds to the resting state of a finger muscle. If a difference is perceived, it is assumed that the user wishes to move the finger and a Turnigy nano servo is activated to move the prosthetic finger.”
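The comparison step can be illustrated as follows. This is a minimal sketch under stated assumptions, not the team's Energia code: the function names are hypothetical, the resting level is assumed to be calibrated by averaging a few readings, and the `margin` of 50 ADC counts is an arbitrary value chosen for illustration (the post does not say how large a difference triggers the servo).

```python
ADC_MAX = 1023  # largest code a 10-bit ADC can produce


def resting_level(calibration_readings):
    """Assumed calibration: average some ADC readings taken with the muscle relaxed."""
    return sum(calibration_readings) / len(calibration_readings)


def wants_to_move(adc_value, nominal, margin=50):
    """Return True when the reading differs from the nominal value by more than margin."""
    if not 0 <= adc_value <= ADC_MAX:
        raise ValueError("reading is outside the 10-bit ADC range")
    return abs(adc_value - nominal) > margin
```

With a resting level of about 200 counts, a reading of 230 would be ignored as noise while a reading of 400 would activate the servo. Some tolerance band of this kind is needed in practice because the resting signal is never perfectly constant.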

“We were able to demonstrate the concept successfully, although there is room for further improvement,” says Shankar. “We used Energia software on the MSP430 LaunchPad and this resulted in a low-cost implementation.”

The project video may be viewed here.

A kind gesture for the visually impaired

Shashank Garg, Rohit Kumar Singh, and Ravi Raj Saxena of Delhi Technological University, working under the mentorship of Prof. Rajiv Kapoor, developed a gesture-based user interface for visually impaired persons.

“Sensing of gestures made in the air needs fairly expensive hardware and complex image processing algorithms,” says Shashank. “We were inspired by a project called SoundWave by Microsoft, which uses simple hardware such as a microphone and a speaker.”

“We used the Doppler effect to detect gestures,” says Rohit. “The wave reflected from a hand that is making a gesture is shifted in frequency compared to the pilot tone. This shift in frequency is analyzed to detect a particular gesture.”
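To get a feel for the size of this shift, the sketch below applies the standard Doppler formula for a reflector moving toward a speaker and microphone that sit side by side. The speed of sound (343 m/s) and the hand speed in the example are assumptions for illustration; the default pilot frequency is the 18.892 kHz tone the team reports using.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed)


def doppler_shift(hand_speed, pilot_hz=18892.0, c=SPEED_OF_SOUND):
    """Frequency shift in Hz of the echo from a hand moving at hand_speed (m/s).

    Positive hand_speed means the hand approaches the device. The reflected
    tone arrives at pilot_hz * (c + v) / (c - v), which is close to
    pilot_hz * (1 + 2 * v / c) for hand speeds much slower than sound.
    """
    return pilot_hz * (c + hand_speed) / (c - hand_speed) - pilot_hz
```

A hand moving at an assumed 0.5 m/s shifts the echo by only a few tens of hertz, and the sign of the shift flips when the hand moves away. The detector therefore has to resolve small frequency changes close to a much stronger pilot tone.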

“We used a BeagleBoard-xM in our project,” adds Ravi. “An inaudible sound wave of 18.892 kHz is emitted by a speaker connected to the BeagleBoard-xM. The wave reflected by a moving obstacle is captured using a microphone. Gesture detection algorithms run on the BeagleBoard-xM, and information on the detected gesture is displayed on an LCD screen. An MSP430 microcontroller from TI handles the display. We also played back the interpretation of the gesture as audio to help a visually impaired user.”
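One simple way to decide whether an echo is shifted up or down is to compare the signal energy just above and just below the pilot tone. The sketch below does this with the Goertzel recurrence. It is not the team's Simulink algorithm: the function names, the 60 Hz offset, the power ratio of 4, and the noise floor are all assumptions picked for illustration.

```python
import math


def goertzel_power(samples, sample_rate, freq_hz):
    """Signal power at a single frequency, computed with the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / sample_rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def motion_direction(samples, sample_rate, pilot_hz=18892.0,
                     offset_hz=60.0, ratio=4.0, floor=1e-3):
    """Classify a block of microphone samples as 'toward', 'away' or 'none'.

    Energy above the pilot suggests a hand approaching, energy below it a hand
    receding. Sidebands weaker than floor * pilot power are treated as noise.
    """
    pilot = goertzel_power(samples, sample_rate, pilot_hz)
    up = goertzel_power(samples, sample_rate, pilot_hz + offset_hz)
    down = goertzel_power(samples, sample_rate, pilot_hz - offset_hz)
    threshold = floor * pilot
    if up > threshold and up > ratio * down:
        return "toward"
    if down > threshold and down > ratio * up:
        return "away"
    return "none"
```

A real detector would also need windowing and would track how the shift evolves over time in order to tell a left swipe from a right swipe. This sketch only shows the core idea of looking for energy on either side of the pilot tone.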

“We used Simulink for developing the software and then used the target support package for BeagleBoard to translate the model into C code that can be compiled for the BeagleBoard,” reveals Shashank.

“We tested our system with three kinds of gestures: left swipe, right swipe, and double throw,” says Rohit. “Gestures were correctly recognized in more than 80% of the cases.”

The video of the project can be viewed here.

We need your signal

Having read about these projects, you may wish to view their video demonstrations. I am keen to hear your feedback and your thoughts, not only on the end applications of these projects but also on their educational value in a classroom or lab. Well-written feedback, posted as a comment, is likely to win a surprise gift from Texas Instruments.