Can you make it cheaper? How often have you been asked this question? In my case, almost always!

In this blog I’ll share my idea for making “it” cheaper in the context of sensor signal conditioners. Before I describe my idea, let me introduce a few things.

Sensor: A sensor is a device that measures a physical quantity of interest. A typical sensor consists of a sense element and a sensor signal conditioner. The sense element converts the physical quantity of interest into an electrical signal. However, the electrical signal produced by the sense element is just bad: it is small in magnitude, it is nonlinear in response, and the response for a given stimulus varies with temperature.

And this is where the sensor signal conditioner comes to the rescue, because it makes the bad signal from the sense element good enough for use by control and monitoring systems. Figure 1 shows a representation of a sensor.

Figure 1: A typical sensor comprises a sense element and a sensor signal conditioner

Mixed-signal conditioning: In the context of integrated circuits (ICs), there are many compelling architectures to implement sensor signal conditioners. But, with the advent of advanced mixed-signal IC fabrication processes, mixed-signal techniques are garnering more interest for conditioning sense element outputs.

In these signal conditioners, signal conditioning occurs partly in the analog domain and partly in the digital domain. The PGA400 is a good example of a device that implements mixed-signal techniques to condition a sense element output; it conditions the output of pressure sense elements.

Multi-modal signal conditioning: When I say multi-modal, all I mean is that a single sensor signal conditioner can process the outputs of multiple sense elements. For example, a pressure sensor signal conditioner not only has to measure the output of the pressure sense element, but also has to measure the output of a temperature sensor. It needs the temperature sensor output in order to compensate for the temperature dependence of the pressure sense element output.

Nyquist: A key functional block in mixed-signal sensor signal conditioners is the analog-to-digital converter (ADC). The ADC discretizes the signal in both amplitude and time. In other words, mixed-signal conditioners are sampled systems. So you have to use anti-alias filters before the ADCs to limit the highest signal frequency, that is, to discard signal content that is outside the frequency band of interest.
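To make the aliasing concern concrete, here is a minimal Python sketch of where a tone lands after sampling when no anti-alias filter is present. The function name and the example frequencies are my own illustrative choices, not values from any particular device.

```python
def alias_frequency(f_in: float, f_s: float) -> float:
    """Apparent frequency (Hz) of a tone at f_in after sampling at f_s.

    Sampling folds every input frequency into the band 0..f_s/2.
    """
    return abs(f_in - f_s * round(f_in / f_s))


f_s = 1000.0  # hypothetical sampling frequency in Hz
for f_in in (100.0, 400.0, 900.0, 1100.0):
    print(f"{f_in:7.1f} Hz input appears at {alias_frequency(f_in, f_s):6.1f} Hz")
```

With these numbers, the 900 Hz and 1100 Hz tones both show up at 100 Hz, indistinguishable from a genuine 100 Hz signal. That is the content an anti-alias filter has to remove before the ADC.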

Now that I’ve introduced the context of this article, I will show a common multi-modal, mixed-signal sensor signal conditioner architecture in Figure 2. As you can see, this architecture uses two ADCs, one for each sense element output. I will assume that each amplifier in the figure includes the necessary anti-alias characteristics.

Figure 2: Processing output of 2 sense elements using independent ADCs

How can I make the architecture in Figure 2 cheaper? Many ways, you say: make the amplifier smaller, use an ADC with lower resolution, etc. But the idea I want to propose is to share the amplifier and ADC as shown in Figure 3. Ah, you yell out Nyquist! You tell me that multiplexing causes Nyquist to be disobeyed. And you remind me that the problem becomes worse if the ADC is a sigma-delta architecture, because unsettled samples after channel switching have to be discarded. That is, multiplexing and sample discarding both reduce the effective sample rate of each channel.

Figure 3: Processing output of 2 sense elements using a common ADC
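The drop in effective sample rate can be estimated with a short Python sketch. It assumes a simple round-robin multiplexer that keeps exactly one settled sample per channel visit; the function name, the ADC output rate and the number of discarded samples are hypothetical values chosen for illustration.

```python
def per_channel_rate(f_adc: float, channels: int, discarded: int) -> float:
    """Effective sample rate (Hz) of each channel behind a shared ADC.

    Assumes a round-robin mux where every visit to a channel costs
    `discarded` unsettled conversions plus one conversion that is kept.
    """
    if channels < 1 or discarded < 0:
        raise ValueError("channels must be >= 1 and discarded must be >= 0")
    conversions_per_visit = discarded + 1
    return f_adc / (channels * conversions_per_visit)


f_adc = 8000.0  # hypothetical ADC output rate in Hz
dedicated = per_channel_rate(f_adc, channels=1, discarded=0)
shared = per_channel_rate(f_adc, channels=2, discarded=3)

print(f"dedicated ADC: {dedicated:.0f} Hz per channel")
print(f"shared ADC:    {shared:.0f} Hz per channel, Nyquist limit {shared / 2:.0f} Hz")
```

Under these assumptions, sharing one ADC between two channels and throwing away three samples per switch cuts each channel from 8000 Hz to 1000 Hz, so anything above 500 Hz will alias.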

Yes, we have to always respect Nyquist. But, here are the conditions under which the architecture in Figure 3 is viable:

- The only content outside the frequency band of interest that is aliased by the undersampling is band-limited white noise.

- The signal-to-noise ratio before sampling meets the required value.

- The time-discretized (or sampled) signal is not filtered further in the digital domain.

Here is an example to explain why. Consider white noise that is band-limited to frequency fB and has a noise density of n V/√Hz. The root-mean-square (RMS) value of the noise is n*√fB.

If this noise is sampled at a sampling frequency of fs without an anti-alias filter, i.e. without a filter that restricts the signal band to fs/2, all the noise is folded into (“constrained” to) the band from 0 to fs/2. Hence, the noise density of the sampled noise is n*√fB/√(fs/2).

However, the RMS value of the sampled noise is still n*√fB. In other words, the RMS value of the noise has not changed because of sampling. So if the signal frequency is less than fs/2, the signal-to-noise ratio is not affected by sampling. That is, Figure 3 works!
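The noise argument can be checked numerically. The Python sketch below first evaluates the two formulas for hypothetical values of n, fB and fs, and then simulates undersampling by keeping every 100th sample of a white Gaussian sequence with no filtering in between. All names and numbers are assumptions made for illustration.

```python
import math
import random


def noise_rms(n: float, f_b: float) -> float:
    """RMS value (V) of white noise with density n (V/√Hz) band-limited to f_b (Hz)."""
    return n * math.sqrt(f_b)


def folded_density(n: float, f_b: float, f_s: float) -> float:
    """Noise density (V/√Hz) after all the noise folds into 0..f_s/2."""
    return noise_rms(n, f_b) / math.sqrt(f_s / 2)


def rms(samples) -> float:
    """RMS value of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


# Closed-form check with hypothetical numbers.
n = 100e-9    # noise density in V/√Hz
f_b = 100e3   # noise bandwidth in Hz
f_s = 2e3     # sampling frequency in Hz, far below 2*f_b

total_rms = noise_rms(n, f_b)             # about 31.6 µV
density = folded_density(n, f_b, f_s)     # 10x the original density
rms_after = density * math.sqrt(f_s / 2)  # integrate folded density over 0..f_s/2

# Simulation: a white sequence on a dense grid at 2*f_b occupies 0..f_b.
random.seed(1)
dense = [random.gauss(0.0, total_rms) for _ in range(400_000)]
step = int(2 * f_b / f_s)   # keep every 100th sample, no anti-alias filter
sampled = dense[::step]

print(f"density before sampling: {n:.3e} V/√Hz")
print(f"density after sampling:  {density:.3e} V/√Hz")
print(f"RMS from formula:        {total_rms:.3e} V")
print(f"RMS of dense sequence:   {rms(dense):.3e} V")
print(f"RMS of sampled sequence: {rms(sampled):.3e} V")
```

The sampled sequence should show essentially the same RMS value as the dense one, while the noise density inside 0 to fs/2 is ten times higher for these numbers. Because of that higher density, any digital filter applied afterwards would remove less noise than the same filter would behind a real anti-alias filter.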

If you have other ideas on how to make signal conditioner architectures cheaper, I would love to hear from you!!

The 94Fifty also features TI wireless charging technology in the basketball (the

The 94Fifty also features TI wireless charging technology in the basketball (the

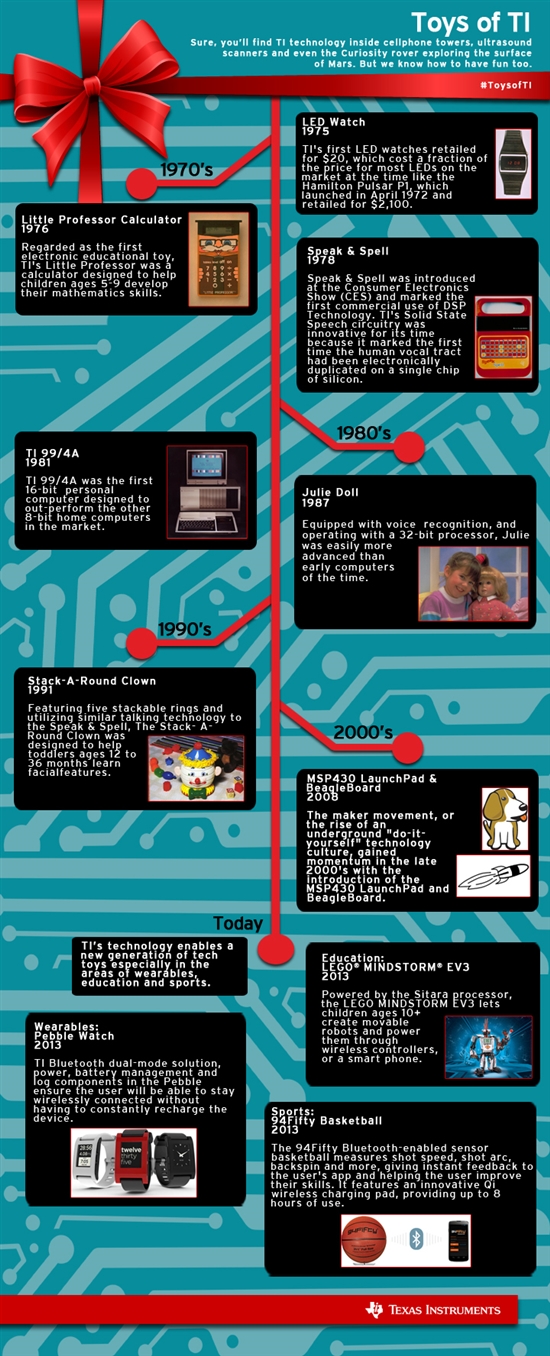
