Guest blog by Warwick Taws, chief technology officer, Wittra

Most of us can see the “Internet of Things (IoT) revolution” coming. It’s a fragmented landscape, with no dominant standard in any part of the technology chain, which can make it difficult for each of us to find where we fit into the overall ecosystem. How can we monetize our brilliant IoT idea? At Wittra, we see a differentiation opportunity in the “Internet of Moving Things”: managing moving things (objects, people and animals) by knowing their position and activity. To give you a better understanding of our company and our products, we’ve answered a few questions below.

1. What is Wittra?

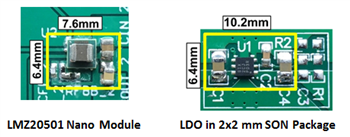

Wittra provides the world’s smallest long-range mobile asset tag, which works together with fixed beacons (base stations). Depending on your view of the world, you could call us an RFID, RTLS or IoT vendor. What sets us apart is our ability to perform distance ranging over our data link at long range (kilometers) with low power consumption. As a result, we can triangulate the tag’s position whenever at least three base stations are available, and operate both outdoors and indoors with a single product. Our tag also contains a GPS chip, but we only use it when we can’t compute an accurate position from our network. Keeping the GPS chip off most of the time is what lets us offer months of battery life on a single charge.
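
To make the ranging-then-positioning idea concrete, here is a minimal sketch. It assumes a flat 2-D site, known base-station coordinates in meters and already-measured tag-to-station distances, and it uses textbook linearized least-squares trilateration, which is not necessarily what Wittra’s network actually runs. The names `trilaterate`, `locate` and `read_gps` are hypothetical; `read_gps` stands in for whatever powers up the tag’s GPS chip.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters on a flat 2-D site map (assumption)


def trilaterate(anchors: Sequence[Point], ranges: Sequence[float]) -> Optional[Point]:
    """Least-squares 2-D tag position from measured distances to base stations.

    Returns None when fewer than three base stations are available or when the
    geometry is degenerate (for example, all base stations on one straight line).
    """
    if len(anchors) != len(ranges):
        raise ValueError("need exactly one range per base station")
    if len(anchors) < 3:
        return None

    (x1, y1), r1 = anchors[0], ranges[0]
    # Subtracting the first circle equation (x-x1)^2 + (y-y1)^2 = r1^2 from each
    # of the others cancels the quadratic terms, leaving linear rows a*x + b*y = c.
    rows: List[Tuple[float, float, float]] = []
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x1)
        b = 2.0 * (yi - y1)
        c = r1 ** 2 - ri ** 2 + xi ** 2 - x1 ** 2 + yi ** 2 - y1 ** 2
        rows.append((a, b, c))

    # Solve the 2x2 normal equations (A^T A) p = A^T c with Cramer's rule.
    saa = sum(a * a for a, _, _ in rows)
    sab = sum(a * b for a, b, _ in rows)
    sbb = sum(b * b for _, b, _ in rows)
    sac = sum(a * c for a, _, c in rows)
    sbc = sum(b * c for _, b, c in rows)
    det = saa * sbb - sab * sab
    if det <= 1e-12 * saa * sbb:  # relative test: (near-)collinear base stations
        return None
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return (x, y)


def locate(
    anchors: Sequence[Point],
    ranges: Sequence[float],
    read_gps: Callable[[], Point],  # hypothetical hook that powers up the GPS chip
) -> Tuple[Point, str]:
    """Prefer the network fix; fall back to GPS only when the network can't provide one."""
    fix = trilaterate(anchors, ranges)
    if fix is not None:
        return fix, "network"
    return read_gps(), "gps"
```

With base stations at (0, 0), (1000, 0) and (0, 1000) m and distances measured to a tag at (300, 400) m, `trilaterate` recovers (300, 400) up to floating-point error, and `locate` never touches GPS. Extra base stations simply add rows to the least-squares fit; a real system would likely also check the fit residual before trusting a noisy network fix.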

Our customers are integrating this technology into products for applications as diverse as maritime safety for boat crews and passengers, pet lifestyle and activity monitoring, elderly care in the home (remote activity monitoring), vehicle security and tracking, racehorse welfare and health monitoring, workflow management for trains in shunting yards and workshops, shipping container tracking and management, and warehouse/inventory logistics (indoor/outdoor pallet tracking). We see new applications every week, and our technology is replacing existing solutions whose limitations keep them from being effective.

2. What makes Wittra stand out from its competitors?

We recently won a number of positioning system deals in which we beat the closest competitor on capital equipment cost by a factor of 10 to 20. This is a good example of what low-cost, high-performance silicon allows us to do. So cost is one differentiator. Range is another: Wi-Fi® and Bluetooth® positioning systems measure range in tens of meters (perhaps a hundred meters at most), while we use kilometers as our baseline. Battery life is a third: GPS-based asset trackers last a few days, whereas we measure battery endurance in months.

Our business customers are using this technology to provide their end users with new features and greatly improved product performance in many different market verticals.

3. There are many wireless connectivity technologies on the market. Why did you choose to integrate Sub-1 GHz and Bluetooth low energy technology in Wittra?

Only Sub-1 GHz technology gives us the unique combination of long communication range and low power. You can’t ignore the laws of physics! Bluetooth low energy complements it by ensuring seamless compatibility with millions of wireless devices already on the market, again at low power. Low power is the Holy Grail for small, battery-operated end devices, because consumers don’t want the inconvenience of frequent battery charging.
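
The physics argument can be illustrated with the free-space path loss (FSPL) formula. This is a simplified comparison only: it assumes isotropic antennas, unobstructed free-space propagation and 868 MHz as a representative Sub-1 GHz band versus 2440 MHz for Bluetooth low energy. Real links also depend on antenna gain, receiver sensitivity, transmit power and obstructions.

```latex
\begin{align}
\mathrm{FSPL}(d, f) &= 20\log_{10}\!\left(\frac{4\pi d f}{c}\right)\ \text{dB} \\
\Delta\mathrm{FSPL} &= \mathrm{FSPL}(d, 2440\,\text{MHz}) - \mathrm{FSPL}(d, 868\,\text{MHz})
  = 20\log_{10}\!\left(\frac{2440}{868}\right) \approx 9.0\ \text{dB} \\
\frac{d_{868}}{d_{2440}} &= \frac{2440}{868} \approx 2.8 \quad \text{(same path-loss budget)}
\end{align}
```

Under these assumptions, a link at 868 MHz reaches roughly 2.8 times as far as a 2.4 GHz link with the same link budget, or equivalently needs about 9 dB less budget to cover the same distance.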

4. Why did you choose TI’s Sub-1 GHz and Bluetooth low energy connectivity technology for your product?

Of all the technology vendors, TI stood out for its RF performance, its commitment to continued innovation, its history of consistently delivering quality, functional silicon, and its willingness to support our product vision. For those reasons, we chose the SimpleLink™ Sub-1 GHz CC1310 and Bluetooth low energy CC2640 wireless MCUs to design into our product.

5. Where do you see your technology/solution going in the next five years?

To maintain our unique market advantages, we need to stay focused on innovation. Over time, we will make our end devices smaller, cheaper and more power-efficient. This will let us enter more market verticals and give our end users a more compelling user experience and a better value proposition. Our fixed infrastructure beacons will also get better at locating end devices, thanks to more flexible radio architectures with more processing power and higher performance.

For more information, visit:

- SimpleLink Sub-1 GHz CC1310 wireless MCU

- SimpleLink Bluetooth low energy CC2640 wireless MCU

- Wittra: www.wittra.se