It’s been a while since I posted entries 1 through 5 in this series, but I still field many questions about FET data sheets, particularly about the parameters in the thermal information table. So today I want to address two data-sheet parameters that cause much confusion: junction-to-ambient thermal impedance and junction-to-case thermal impedance.

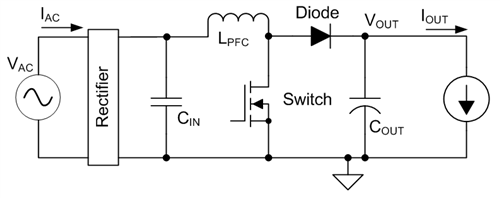

First, let’s define exactly what these parameters mean. When it comes to thermal impedance, it’s hard to find consistent nomenclature within the FET industry; sometimes the nomenclature is not even consistent within the same company. For the sake of this post, I will use the parameters defined in Figure 1 and Table 1. If you think of heat flow as analogous to current, it’s easy to envision the resistance network, shown in Figure 1, through which heat dissipates from the junction (the silicon die) to the ambient environment. The equivalent impedance of this network is what we call the junction-to-ambient thermal impedance (RθJA) of the device.

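Described mathematically by Equation 1, RθJA is the parallel combination of the impedance through the top of the package to the ambient environment and the impedance through the bottom of the package and then through the printed circuit board (PCB):

$$R_{\theta JA} = \left(R_{\theta JT} + R_{\theta TA}\right) \parallel \left(R_{\theta JB} + R_{\theta BA}\right) = \frac{\left(R_{\theta JT} + R_{\theta TA}\right)\left(R_{\theta JB} + R_{\theta BA}\right)}{R_{\theta JT} + R_{\theta TA} + R_{\theta JB} + R_{\theta BA}} \tag{1}$$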
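Equation 1 translates directly into a few lines of Python. This is an illustrative sketch only: the helper names `parallel` and `r_theta_ja` are hypothetical, and so are the inputs. RθJT and RθBA are picked from the ranges quoted in this post, while RθJB = 1.5°C/W and a still-air RθTA of 1000°C/W are made-up placeholders, not data-sheet values.

```python
def parallel(r_a: float, r_b: float) -> float:
    """Two thermal resistances (°C/W) in parallel, combined like resistors."""
    return (r_a * r_b) / (r_a + r_b)


def r_theta_ja(r_jt: float, r_ta: float, r_jb: float, r_ba: float) -> float:
    """Equation 1: the top path (RθJT + RθTA) in parallel with the
    bottom path through the PCB (RθJB + RθBA). All values in °C/W."""
    top_path = r_jt + r_ta
    bottom_path = r_jb + r_ba
    return parallel(top_path, bottom_path)


if __name__ == "__main__":
    # Hypothetical inputs: RθJT from the 12-15 °C/W range, RθBA from the
    # 10-40 °C/W range; RθTA and RθJB are assumed placeholder values.
    r_ja = r_theta_ja(r_jt=13.0, r_ta=1000.0, r_jb=1.5, r_ba=20.0)
    print(f"R_JA = {r_ja:.2f} C/W")
```

With these assumed inputs the result comes out at roughly 21.05°C/W, only slightly below the 21.5°C/W of the bottom path alone.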

Of the four parameters that make up RθJA, the FET itself dictates only two: RθJB and RθJT. Because in practice it is much easier to dissipate heat through the PCB, RθJB + RθBA is usually much smaller than RθJT + RθTA, so you can neglect the RθJT + RθTA branch in Equation 1. (This may not be the case if the device has DualCool™ packaging or an exposed metal top. Typical RθJT for a standard 5mm-by-6mm quad flat no-lead (QFN) package is on the order of 12-15°C/W, but you can reduce it to 2-3°C/W with an exposed metal top and techniques that put the silicon die closer to the top of the package. All of this is for naught, however, unless you also reduce RθTA, for example by attaching a heat sink to the device or adding airflow.)
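To see when neglecting the top path is safe, the sketch below evaluates Equation 1 for three hypothetical cases and compares each against the bottom-path-only approximation. The RθJT values come from the ranges above; RθJB, RθBA and both RθTA values (1000°C/W for still air, 10°C/W with a heat sink) are illustrative assumptions, not measured data.

```python
def r_theta_ja(r_jt: float, r_ta: float, r_jb: float, r_ba: float) -> float:
    """Equation 1: (RθJT + RθTA) in parallel with (RθJB + RθBA), in °C/W."""
    top_path = r_jt + r_ta
    bottom_path = r_jb + r_ba
    return (top_path * bottom_path) / (top_path + bottom_path)


# Hypothetical bottom path (°C/W): assumed RθJB, RθBA from the 10-40 range.
R_JB = 1.5
R_BA = 20.0

# Hypothetical (RθJT, RθTA) pairs. RθJT comes from the ranges quoted in the
# text; both RθTA values are assumptions (still air vs. a heat sink).
SCENARIOS = {
    "standard top, still air": (13.0, 1000.0),
    "exposed metal top, still air": (2.5, 1000.0),
    "exposed metal top + heat sink": (2.5, 10.0),
}


def compare_scenarios() -> dict[str, float]:
    """Return RθJA for each scenario using the full Equation 1."""
    return {name: r_theta_ja(r_jt, r_ta, R_JB, R_BA)
            for name, (r_jt, r_ta) in SCENARIOS.items()}


if __name__ == "__main__":
    bottom_only = R_JB + R_BA  # approximation that neglects the top path
    print(f"bottom path only: {bottom_only:.2f} C/W")
    for name, r_ja in compare_scenarios().items():
        # How far the bottom-only shortcut overestimates the full RθJA.
        error = (bottom_only - r_ja) / r_ja
        print(f"{name}: R_JA = {r_ja:.2f} C/W, "
              f"bottom-only overestimates by {error:.0%}")
```

With these numbers, both still-air cases land within about 2% of the bottom-only estimate, so the exposed top alone buys almost nothing; only once the assumed heat sink lowers RθTA does RθJA drop well below RθJB + RθBA.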

When FET vendors list junction-to-case thermal impedance (RθJC) in the data sheet, they could technically be referring to either RθJB or RθJT, but you can usually assume they mean RθJB.

Figure 1: Resistance network between the silicon junction and ambient environment

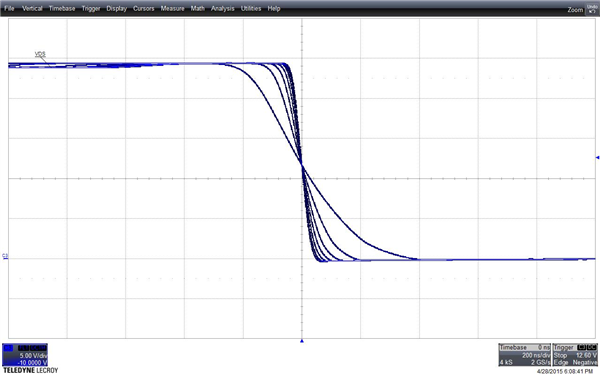

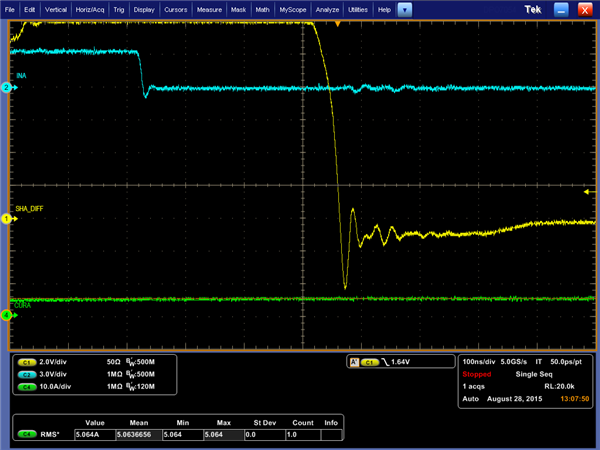

Because RθBA depends entirely on board conditions (PCB size, copper thickness, number of layers), it is impossible to know the total RθJA without also knowing RθBA. And RθBA is usually the dominant impedance, so it largely dictates RθJA: in practical applications it can be as high as 40°C/W, or as low as about 10°C/W in well-designed systems. FET vendors can guarantee only RθJC, but they typically do provide RθJA values for worst-case scenarios. For example, transistor outline (TO)-220 and TO-263 (D2PAK) data sheets list RθJA measured with the device suspended in air (see Figure 2). QFN devices, on the other hand, are measured on a board with a 1-in² copper pad and on a minimum-copper board (see Figure 3). The maximum values provided in the data sheet and shown in Figure 3 are 25% above the values measured in characterization. Because these RθJA values depend almost entirely on the package’s interaction with the surrounding board, rather than on die size or the thermal mechanics inside the device, they are more or less industry standard for a given package.
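A rough sketch of the two points above: with the top path neglected, RθJA is simply RθJB + RθBA, so the board term dominates across the practical 10-40°C/W range, and a data-sheet maximum sits 25% above the characterized value. The RθJB of 1.5°C/W and the measured RθJA of 40°C/W are hypothetical inputs chosen for illustration, not values from any specific data sheet, and the function names are likewise hypothetical.

```python
# Hypothetical FET value (°C/W); a real RθJB comes from the device data sheet.
R_JB = 1.5


def r_theta_ja_bottom_only(r_jb: float, r_ba: float) -> float:
    """RθJA with the top path neglected: the series bottom path only."""
    return r_jb + r_ba


def board_share(r_jb: float, r_ba: float) -> float:
    """Fraction of RθJA contributed by the board-dependent RθBA."""
    return r_ba / r_theta_ja_bottom_only(r_jb, r_ba)


def datasheet_max(measured: float, margin: float = 0.25) -> float:
    """Data-sheet maximum quoted a given margin (25%) above the measured value."""
    return measured * (1.0 + margin)


if __name__ == "__main__":
    # Sweep RθBA across the practical 10-40 °C/W range quoted in the text.
    for r_ba in (10.0, 20.0, 40.0):
        r_ja = r_theta_ja_bottom_only(R_JB, r_ba)
        print(f"R_BA = {r_ba:.0f} C/W -> R_JA = {r_ja:.1f} C/W "
              f"(board share {board_share(R_JB, r_ba):.0%})")
    # A hypothetical characterized RθJA of 40 °C/W would be listed as 50 °C/W max.
    print(f"data-sheet max: {datasheet_max(40.0):.1f} C/W")
```

Even at the low end of the range, the assumed board term accounts for well over 80% of RθJA, which is why the board, not the FET, sets the final number.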

Figure 2: TO-220 device suspended in the air for RθJA measurement

Figure 3: Small outline no-lead (SON) 5mm-by-6mm RθJA measurements as they appear in the device data sheet

I could write another 13 pages elaborating on these values, but since Darvin Edwards beat me to the punch with an excellent application note, I’ll just redirect you there.

Also, please check out Manu Balakrishnan’s similar breakdown of these thermal parameters (part 1 and part 2), particularly regarding how they pertain to selecting the right FETs for power tools where thermal performance is critical.

I think this should be the final entry of this series, which I never anticipated would grow to six installments. But hey, that’s what spinoffs are for, right? Please join me next month, when I will discuss MOSFET selection methods for a wide array of applications. In the meantime, consider one of TI’s MOSFETs for your next design.