Vehicle manufacturers continue to make infotainment systems an extension of the multimedia experience. The USB interface has been a fundamental element of the infotainment architecture, and thus manufacturers have subjected this originally consumer-focused interface to significantly more stringent protection requirements. Among these is the need to protect against shorts to the vehicle battery that can occur during assembly, manufacturing or maintenance. For example, a damaged long-wire harness connecting the head unit to different connectivity modules can short all pins to the main 12V car battery. Other potential failure mechanisms include the use of noncompliant adapters, cables or chargers; mechanical twists of USB connectors or cables; or any sort of debris getting into the connector and shorting the data lines to VBUS.

In part one of this two-part series, I will illustrate the best way to protect USB circuits from short-to-battery faults. In my next post, I will expand on the best way to optimize your automotive USB short-to-battery design.

When designing for USB short-to-battery protection, always keep in mind three major areas:

- The bandwidth of the protection solution.

- The clamping voltage and response-time behavior.

- The overcurrent and short-to-ground characteristics.

In the past, it wasn’t possible to find a USB 2.0 short-to-battery solution that could address all three areas, but TI’s new TPD3S714-Q1 family of short-to-battery protection devices can help solve these common issues.

Bandwidth

Signal integrity is one of the biggest challenges for design engineers working in automotive USB applications. Since USB 2.0 supports data rates up to 480Mbps, any small amount of capacitance added to the lines can distort the signal and cause failures in data transmission. Designers are left with the complicated task of finding a solution that will protect sensitive electronics against high voltage and current spikes, while maintaining optimal signal integrity.
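To see why even a few picofarads matter, a first-order estimate treats the added capacitance and the line's effective source resistance as a simple RC low-pass filter. The sketch below is only a rough approximation: the 22.5Ω figure assumes two 45Ω high-speed terminations in parallel, and the capacitance values are illustrative, not measurements of any particular device.

```python
import math

def rc_bandwidth_hz(resistance_ohms, capacitance_f):
    """-3dB corner frequency of a first-order RC low-pass: 1 / (2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * resistance_ohms * capacitance_f)

# Assumption: each data line sees roughly 45 ohm || 45 ohm = 22.5 ohm.
EFFECTIVE_RESISTANCE_OHMS = 22.5

# Illustrative shunt capacitances added by a protection device, in picofarads.
for c_pf in (1, 5, 10):
    f_hz = rc_bandwidth_hz(EFFECTIVE_RESISTANCE_OHMS, c_pf * 1e-12)
    print(f"{c_pf} pF -> about {f_hz / 1e9:.2f} GHz")
```

Doubling the capacitance halves the corner frequency in this model, which is why low-capacitance protection is emphasized for high-speed lines.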

The TPD3S714-Q1 is a single-chip solution for short-to-battery, short-circuit and electrostatic discharge (ESD) protection for the USB connector’s VBUS and data lines. The integrated data switches provide high bandwidth for minimal signal degradation while simultaneously providing up to 18V short-to-battery protection. Figure 1 is an insertion-loss diagram highlighting the high-speed data switches with 1GHz -3dB bandwidth.

Figure 1: TPD3S714-Q1 data switch differential bandwidth

You can use eye diagrams to analyze line-capacitance effects on bandwidth. Measuring the minimum and maximum voltage levels as well as jitter makes it possible to expose any issues in USB data-line transmissions. The high 1GHz bandwidth allows for USB 2.0 high-speed applications. Extra margin in bandwidth above 720MHz also helps maintain a clean USB 2.0 eye diagram with the long captive cables common in automotive USB environments. Figure 2 is an example USB 2.0 eye diagram.

Figure 2: USB 2.0 eye diagram with the TPD3S714-Q1
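The 720MHz figure lines up with the third harmonic of a 480Mbps signal. As a quick sanity check, the sketch below assumes NRZ-style signaling, where an alternating 1-0 bit pattern produces a fundamental at half the bit rate; the function name is hypothetical.

```python
def nrz_harmonic_hz(bit_rate_bps, harmonic=1):
    """Frequency of the given harmonic for an alternating 1-0 bit pattern.

    One full cycle spans two bits, so the fundamental is bit_rate / 2.
    """
    return harmonic * bit_rate_bps / 2.0

USB2_HIGH_SPEED_BPS = 480e6
SWITCH_BANDWIDTH_HZ = 1e9  # -3dB bandwidth quoted for the data switches

fundamental = nrz_harmonic_hz(USB2_HIGH_SPEED_BPS)         # 240 MHz
third = nrz_harmonic_hz(USB2_HIGH_SPEED_BPS, harmonic=3)   # 720 MHz

print(f"fundamental: {fundamental / 1e6:.0f} MHz")
print(f"third harmonic: {third / 1e6:.0f} MHz")
print(f"margin above third harmonic: {(SWITCH_BANDWIDTH_HZ - third) / 1e6:.0f} MHz")
```

Passing the third harmonic with margin helps preserve edge rates, which is what keeps the eye open over long cables.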

Clamping voltage and response time

Even though bandwidth is one of the most important characteristics to keep in mind when selecting a protection solution, you also have to ensure that the clamping voltage is low enough to protect the upstream circuitry from any short-to-battery or ESD event. Furthermore, the overvoltage field-effect transistors (FETs) should have a fast turn-off time in order to isolate the upstream system-on-chip (SoC) from harmful voltage and current spikes as quickly as possible.

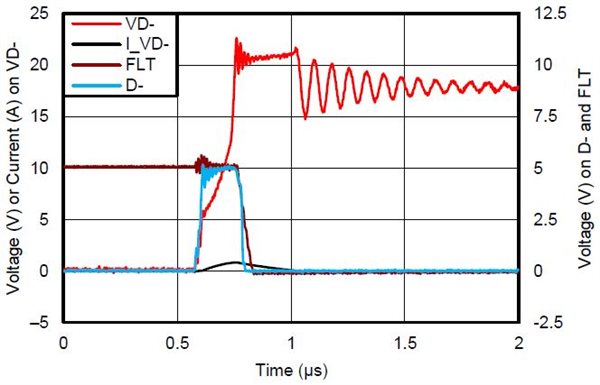

The short-to-battery protection isolates the internal system circuits from any overvoltage conditions at the VBUS, D+ and D- pins. On these pins, the TPD3S714-Q1 can handle overvoltages up to 18V for hot-plug and DC events. The overvoltage-protection circuit provides the most reliable short-to-battery isolation in the industry, helping improve system-level protection. Figure 3 shows its 5V clamping voltage during a short-to-18V fault, highlighting the ultra-fast response time of 200ns on the data path.

Figure 3: TPD3S714-Q1 data switch short-to-18V response waveform
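When evaluating a captured fault waveform like this one, two numbers are worth extracting: the peak voltage seen on the system side and how long the voltage stayed above a level you consider unsafe. The sketch below shows one way to do that from sampled scope data. The sample values and the 4V threshold are synthetic placeholders, not measurements of the TPD3S714-Q1, and the function name is hypothetical.

```python
def overvoltage_exposure(times_ns, volts, threshold_v):
    """Return (peak_v, ns_above_threshold) for a sampled waveform.

    An interval counts as "above" only when both of its endpoints
    exceed the threshold, which is a conservative-low estimate.
    """
    peak_v = max(volts)
    ns_above = 0
    for i in range(1, len(times_ns)):
        if volts[i - 1] > threshold_v and volts[i] > threshold_v:
            ns_above += times_ns[i] - times_ns[i - 1]
    return peak_v, ns_above

# Synthetic system-side samples, 50ns apart (illustrative only).
times_ns = [0, 50, 100, 150, 200, 250, 300]
volts = [3.3, 3.3, 4.8, 5.0, 4.9, 3.3, 0.0]

peak_v, ns_above = overvoltage_exposure(times_ns, volts, threshold_v=4.0)
print(f"peak {peak_v} V, {ns_above} ns above threshold")
```

Compare the peak against the absolute maximum rating of the upstream SoC pins, and the exposure time against the response time quoted for the protection device.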

Overcurrent and short-to-ground

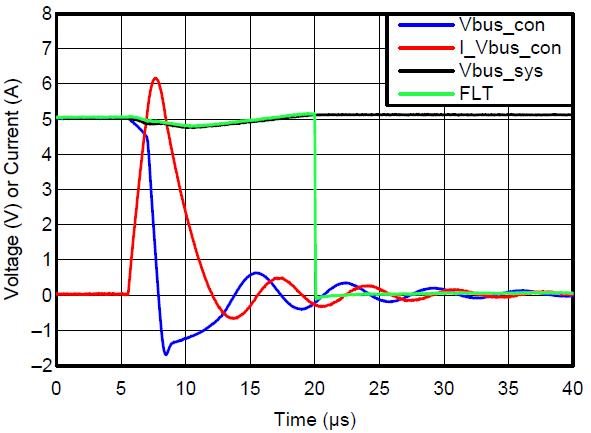

Selecting a poor overcurrent-protection circuit can become a roadblock to faster time to market. Substantial amounts of current flowing through the system side during overcurrent events could cause a brownout or blackout on the upstream 5V rail and potentially bring down or reset multiple integrated circuits (ICs) connected to the shared rail. The purpose of an overcurrent-protection device is to limit the amount of current a USB port can draw, such as in a short-to-ground scenario. Furthermore, the USB 2.0 specification requires overcurrent protection in any host or self-powered hub design.

Figure 4 illustrates a short-to-ground event where the system voltage drops by less than 200mV, keeping the shared 5V rail stable and properly isolated from faults. The TPD3S714-Q1 integrates an accurate current-limit load switch up to 0.5A, automatically limiting current during an overcurrent event. The internal FET switch prevents excess current from flowing through the upstream device, keeping the system side from resetting.

Figure 4: TPD3S714-Q1 VBUS short-to-ground response waveform
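The effect of a current-limited load switch can be pictured with an idealized model: the port draws whatever Ohm's law dictates until the limit is reached, and no more after that. The sketch below is a simplified steady-state model only. It ignores the switch's response time and thermal behavior, and the function name and load values are hypothetical.

```python
def port_current_a(vbus_v, load_ohms, limit_a=0.5):
    """Steady-state current through an ideal current-limited switch.

    The 0.5A default mirrors the limit quoted in the text; a load of
    zero ohms or less is treated as a dead short.
    """
    if load_ohms <= 0:
        return limit_a  # dead short: only the limit bounds the current
    return min(vbus_v / load_ohms, limit_a)

# Illustrative loads on a 5V VBUS: a light load, a heavy load and a near short.
for load_ohms in (100.0, 10.0, 0.01):
    current = port_current_a(5.0, load_ohms)
    print(f"{load_ohms} ohm load -> {current:.2f} A")
```

Without the limit, the near-short case would demand hundreds of amps from the shared 5V rail, which is the brownout scenario described above.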

Remember – when looking for a USB 2.0 short-to-battery solution, always keep in mind the bandwidth of the protection device, the clamping voltage and response time, and the overcurrent and short-to-ground characteristics. Considering these key areas makes implementations easier and reduces time to market for original equipment manufacturers (OEMs).

Subscribe to Behind the Wheel to receive an email notification upon the publication of the second post.

Additional resources

- Check out the TI Designs Two-Port Automotive USB 2.0 Hub with Short-to-Battery Protection Reference Design.

- Read the white paper, “Designing USB for short-to-battery tolerance in automotive environments.”

- Check out the video, “Introducing the TPD3S714-Q1 for automotive USB short-to-battery.”

- Evaluate your design with the TPD3S714-Q1 evaluation module for automotive USB 2.0 short-to-battery protection.
