Wireless earbuds have infiltrated the electronics market in recent years. Users can now walk away from their streaming devices without the fear of being yanked back by a caught wire. True wireless earbuds are Bluetooth®-based wireless earbuds that have their left and right channels separated into individual housings. And while this innovation has freed consumers from having to be connected to their phones by a wire, it has also presented a host of new design challenges for earbud manufacturers.

To maximize battery life and runtime, the earbuds must charge efficiently, which requires them to be seated properly in their charging case. Magnetic sensors help ensure proper earbud seating because they use magnets to detect fine object movements. Current-sense amplifiers for earbud charging and Hall-effect switches in the charging case are commonly used together to support maximum battery charge and battery life in these applications.

Designing with current-sense amplifiers

The batteries in wireless earbuds are often in the sub-100-mAh range, so more precise current measurement is necessary to protect and accurately charge these smaller-capacity cells. Traditional battery chargers and gauges do an excellent job of monitoring larger currents, such as those of the charging-case battery, but often do not fare well at very low currents.
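One common reason for poor low-current accuracy is that any fixed input offset becomes a larger share of a shrinking shunt voltage. The sketch below illustrates that arithmetic; the 100-mΩ shunt and 100-µV offset are hypothetical round numbers chosen for illustration, not specifications of any charger, gauge, or amplifier mentioned here.

```python
# Hypothetical values for illustration only; not taken from any datasheet.
SHUNT_OHMS = 0.1        # assumed 100-mΩ sense resistor
OFFSET_VOLTS = 100e-6   # assumed 100-µV input offset voltage


def offset_error_percent(current_amps: float,
                         shunt_ohms: float = SHUNT_OHMS,
                         offset_volts: float = OFFSET_VOLTS) -> float:
    """Worst-case error from input offset alone, as a percentage of the reading."""
    shunt_volts = current_amps * shunt_ohms  # signal the measurement actually sees
    return 100.0 * offset_volts / shunt_volts


for milliamps in (500.0, 50.0, 5.0):
    err = offset_error_percent(milliamps / 1000.0)
    print(f"{milliamps:5.0f} mA -> {err:5.2f} % error")
```

With these assumed numbers, the offset term grows from 0.2% of the reading at 500 mA to 20% at 5 mA, which is why a measurement chain with a lower offset matters for small earbud cells.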

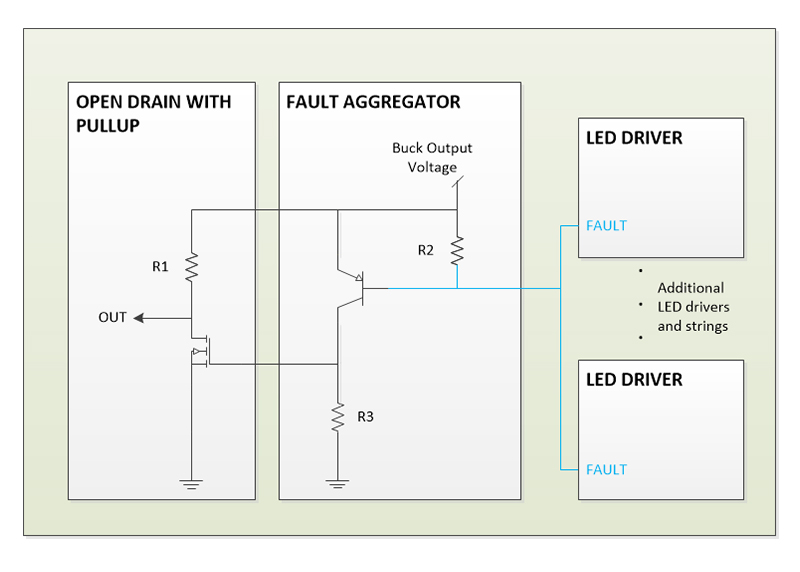

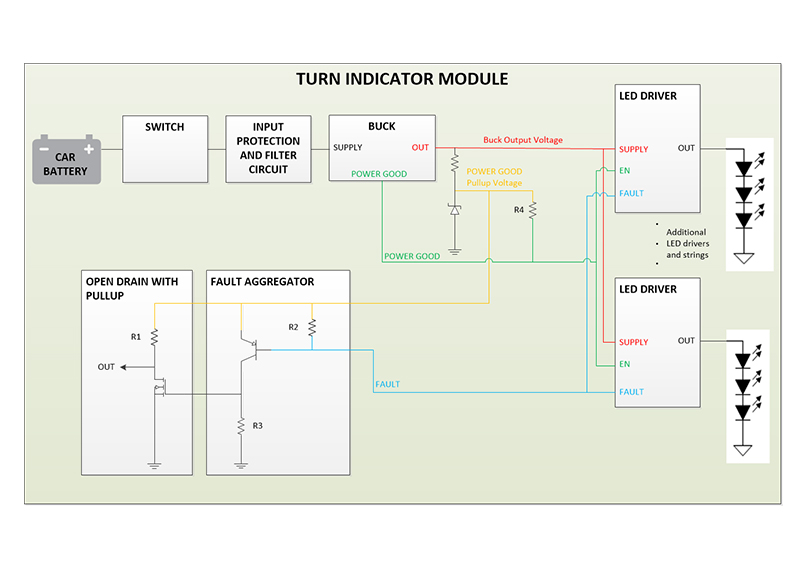

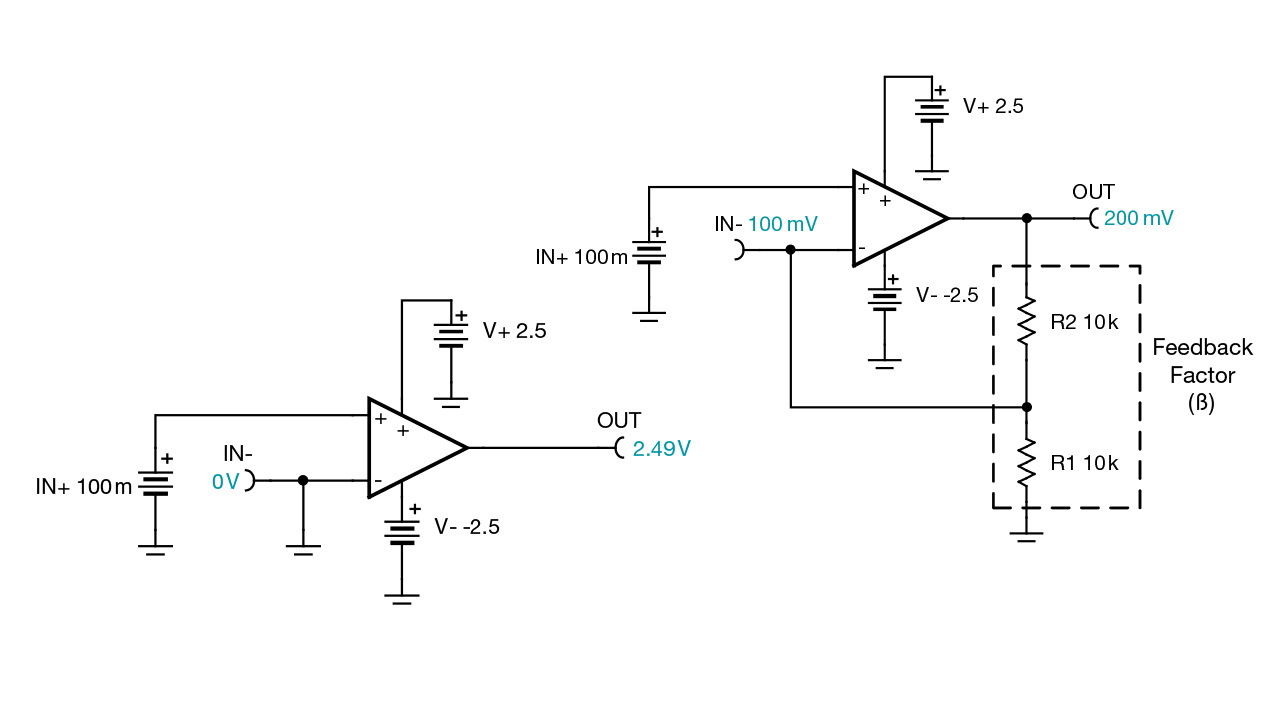

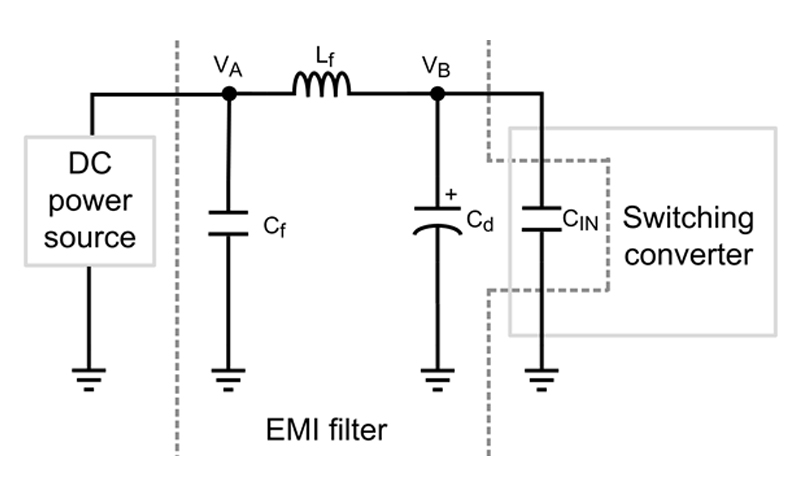

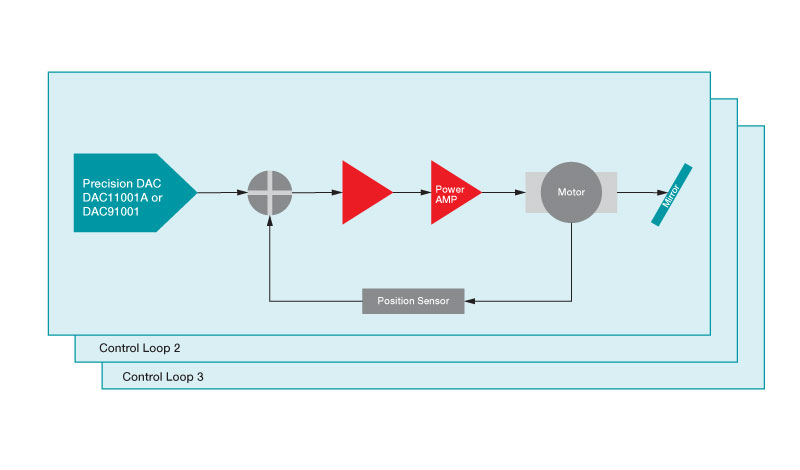

Dedicated current-sense amplifiers are more accurate when measuring small currents. If your design already includes a microcontroller (MCU) or power-management integrated circuit (PMIC), you can feed it the output of these amplifiers and use algorithms running on the MCU or PMIC to gauge battery runtime and lifetime. Figure 1 shows a battery fuel gauge with an external current-sense amplifier and controller.

Figure 1: Battery fuel gauging with an external current-sense amplifier and controller
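A gauging algorithm on the MCU or PMIC typically starts by converting the amplifier output back into current and integrating it over time (coulomb counting). The sketch below shows that idea under stated assumptions: a unidirectional amplifier whose output is gain × shunt voltage, sampled by an ADC. The class name, gain, shunt value, and ADC settings are all hypothetical placeholders rather than values for a specific TI device.

```python
from dataclasses import dataclass


@dataclass
class CoulombCounter:
    """Minimal coulomb counter fed by a unidirectional current-sense amplifier via an ADC.

    Every parameter is a hypothetical placeholder, not a value for a specific part.
    """
    gain_v_per_v: float      # amplifier gain (assumed)
    shunt_ohms: float        # sense-resistor value (assumed)
    adc_ref_volts: float     # ADC full-scale reference voltage (assumed)
    adc_bits: int            # ADC resolution in bits (assumed)
    charge_mah: float = 0.0  # running total of charge measured through the shunt, in mAh

    def adc_to_amps(self, code: int) -> float:
        # ADC code -> amplifier output voltage -> current through the shunt.
        v_out = code * self.adc_ref_volts / (2 ** self.adc_bits - 1)
        return v_out / (self.gain_v_per_v * self.shunt_ohms)

    def update(self, code: int, dt_seconds: float) -> float:
        # Rectangular integration: add I * dt, converting ampere-seconds to mAh.
        amps = self.adc_to_amps(code)
        self.charge_mah += amps * dt_seconds * 1000.0 / 3600.0
        return amps


# Example: 20 mA held for 180 s should accumulate 1 mAh.
counter = CoulombCounter(gain_v_per_v=100, shunt_ohms=0.1,
                         adc_ref_volts=4.095, adc_bits=12)
for _ in range(180):
    counter.update(200, dt_seconds=1.0)  # code 200 -> 0.2 V -> 20 mA
print(round(counter.charge_mah, 3))  # 1.0
```

Rectangular integration is the simplest choice; a production gauge would also compensate for offset, temperature, and cell aging, which this sketch omits.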

Placing two small current-sense amplifiers such as the INA216 in a wireless earbud charging case, one for each earbud's charging path, enables highly accurate charging-current measurements. Alternatively, if solution size is a priority, a single dual-channel current-sense amplifier such as the INA2180 is recommended.
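Measuring each earbud on its own channel lets the firmware see each charging current directly, whereas a single shared sensor only reports the sum and must assume how it divides. The sketch below quantifies the error of an equal-split assumption; the function names and the 30-mA/6-mA scenario are hypothetical.

```python
def shared_sensor_estimate(total_ma: float) -> tuple[float, float]:
    """Per-earbud estimate from one shared sensor, assuming an equal split."""
    return total_ma / 2.0, total_ma / 2.0


def split_error_ma(left_ma: float, right_ma: float) -> tuple[float, float]:
    """Error of the equal-split estimate versus a true per-channel measurement."""
    est_left, est_right = shared_sensor_estimate(left_ma + right_ma)
    return est_left - left_ma, est_right - right_ma


# Hypothetical case: one earbud nearly full, the other still charging hard.
print(split_error_ma(left_ma=30.0, right_ma=6.0))  # (-12.0, 12.0)
```

When the two earbuds are at different states of charge, the shared estimate can be off by a large fraction of each actual current, which is the accuracy that a second channel buys.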

If accuracy is less critical, a single current sensor can monitor charging for both earbuds, assuming the charging current divides equally between them. Placing bidirectional current-sense amplifiers such as the INA191 or INA210 in the earbuds themselves enables both charging and gauging functions. Whichever topology you choose, these devices also enable better battery protection, since even small changes in current can affect battery lifetime.
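A bidirectional amplifier lets the same measurement cover both charging and discharging by biasing its output around a reference voltage. The sketch below assumes the transfer function V_OUT = V_REF + G × I × R_SHUNT and a sign convention in which positive current flows into the battery; confirm both against the datasheet of the part you actually use. The function name, deadband, and example values are hypothetical.

```python
def decode_bidirectional(v_out: float, v_ref: float,
                         gain_v_per_v: float, shunt_ohms: float,
                         deadband_amps: float = 0.0) -> tuple[float, str]:
    """Return (current in amps, direction) from a bidirectional amplifier output.

    Assumes V_OUT = V_REF + G * I * R_SHUNT, with positive current defined
    (as a hypothetical convention) as current flowing into the battery.
    """
    amps = (v_out - v_ref) / (gain_v_per_v * shunt_ohms)
    if amps > deadband_amps:
        direction = "charging"
    elif amps < -deadband_amps:
        direction = "discharging"
    else:
        direction = "idle"  # within the deadband around zero current
    return amps, direction


amps, direction = decode_bidirectional(1.75, 1.65, gain_v_per_v=200, shunt_ohms=0.05)
print(f"{amps * 1000:.1f} mA, {direction}")  # 10.0 mA, charging
```

The deadband avoids flickering between "charging" and "discharging" when noise sits around zero current; its size is a design choice, not a value from the source.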

Designing with Hall-effect sensors

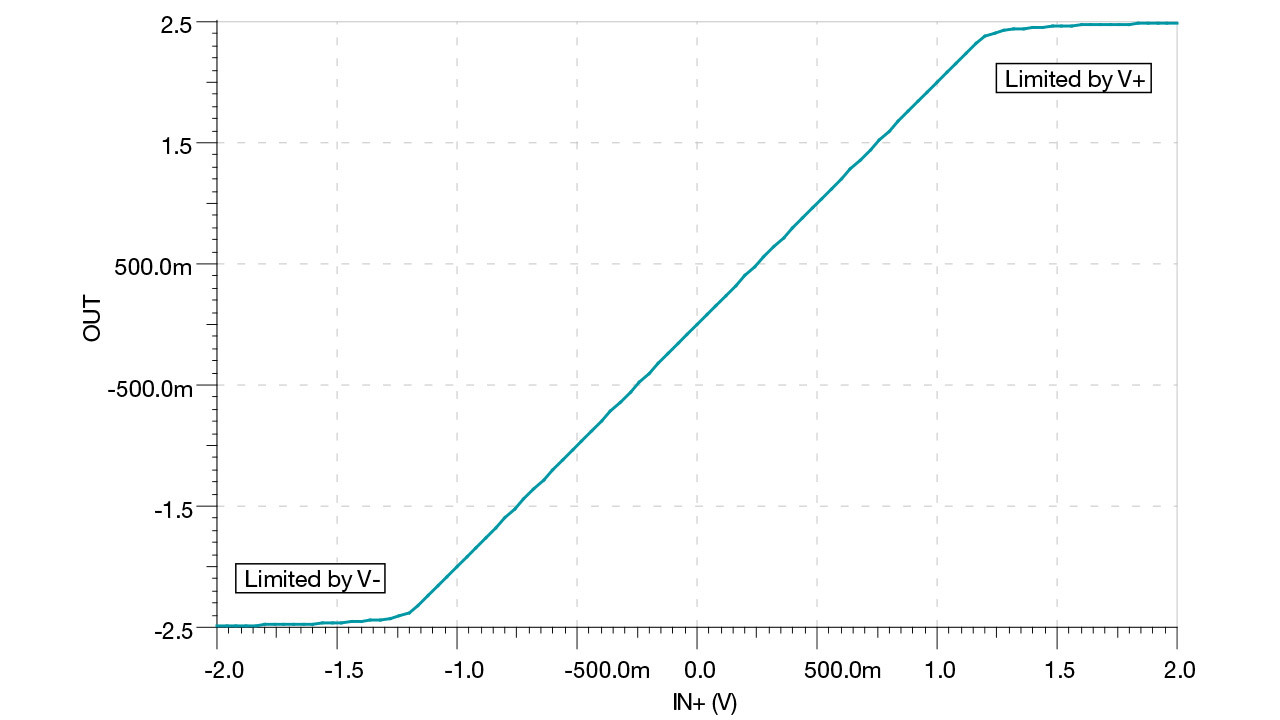

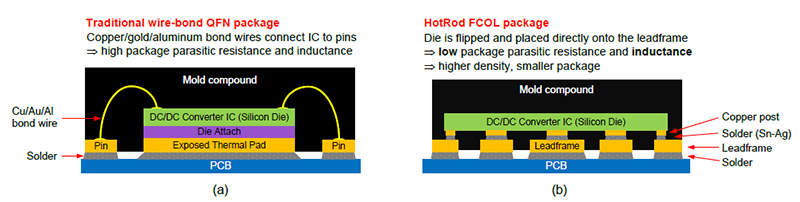

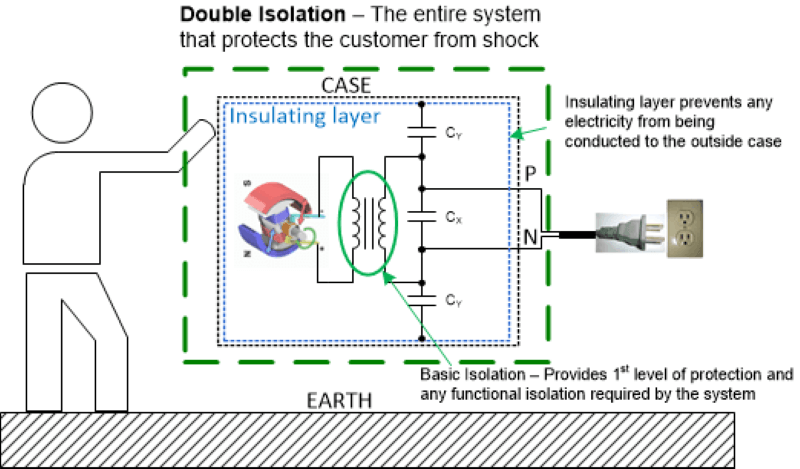

A new feature of wireless earbuds is lid and charge detection in their companion charging cases. The case must detect the position of its lid as well as the presence of the earbuds inside it for charging. Other sensor technologies may lack the capability or sensitivity to detect these conditions reliably in a cost-effective way, so choosing the right sensor is crucial. Figure 2 shows wireless earbud sensor placement.

Figure 2: Wireless earbud sensor placement and use

Hall-effect sensors work well for charging-case lid and earbud charge detection. Magnets are already used to clasp charging-case lids shut, so using Hall-effect switches for lid detection is a natural choice that requires no additional magnet. In addition, placing magnets in the earbuds themselves provides a robust way to detect whether the earbuds are inside the charging case. Knowing whether the earbuds are in or out of the case enables Bluetooth auto-connect when they are removed and charge detection when they are inside.
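The lid and presence logic described above can be sketched as edge detection on three digital Hall-switch readings: one near the lid clasp and one under each earbud cradle. The sketch abstracts each switch as a boolean "magnet detected" (real switch output polarity varies by device and must be checked), and the class, field, and event names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseInputs:
    """One snapshot of the case's Hall switches (True = magnet detected)."""
    lid_magnet: bool    # switch near the clasp sees the lid magnet -> lid closed
    left_magnet: bool   # switch under the left cradle sees the earbud's magnet
    right_magnet: bool  # switch under the right cradle sees the earbud's magnet


def case_events(prev: CaseInputs, now: CaseInputs) -> list[str]:
    """Compare two snapshots and report changes as (hypothetical) event names."""
    events = []
    if prev.lid_magnet != now.lid_magnet:
        events.append("lid_closed" if now.lid_magnet else "lid_opened")
    for side in ("left", "right"):
        was_in = getattr(prev, f"{side}_magnet")
        is_in = getattr(now, f"{side}_magnet")
        if was_in and not is_in:
            events.append(f"{side}_removed")   # e.g. trigger Bluetooth auto-connect
        elif is_in and not was_in:
            events.append(f"{side}_inserted")  # e.g. start charge detection
    return events


closed = CaseInputs(lid_magnet=True, left_magnet=True, right_magnet=True)
opened = CaseInputs(lid_magnet=False, left_magnet=False, right_magnet=True)
print(case_events(closed, opened))  # ['lid_opened', 'left_removed']
```

Reporting transitions rather than levels means each action fires once per insertion or removal instead of on every sample.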

Choosing the right digital Hall-effect sensor is important; features such as a low sampling rate and low power consumption make the DRV5032 a good fit. For Hall-effect sensing in earbud charging cases, updating magnet-detection information five times per second is more than enough. This rate allows you to use the DRV5032's low-power option, which consumes only about 0.5 µA and does not place a significant drain on the battery.
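The trade-off behind a 5-Hz rate and a roughly 0.5-µA current can be checked with simple arithmetic: sampling five times per second bounds the detection delay at about one 200-ms period, and a constant 0.5 µA adds up to only a few mAh per year. The helper names below are hypothetical, and the sketch treats the sensor current as a constant average.

```python
HOURS_PER_DAY = 24
HOURS_PER_YEAR = 24 * 365


def sensor_drain_mah(avg_current_ua: float, hours: float) -> float:
    """Charge consumed by a continuously powered sensor, in mAh."""
    return avg_current_ua / 1000.0 * hours


def worst_case_latency_ms(sample_rate_hz: float) -> float:
    """A field change just after one sample is seen about one period later."""
    return 1000.0 / sample_rate_hz


print(worst_case_latency_ms(5))                         # 200.0
print(round(sensor_drain_mah(0.5, HOURS_PER_DAY), 3))   # 0.012
print(round(sensor_drain_mah(0.5, HOURS_PER_YEAR), 2))  # 4.38
```

A 200-ms worst-case delay is imperceptible when a user opens the lid or lifts out an earbud, while about 0.012 mAh per day is small next to the charge the case battery spends on charging the earbuds.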

Determining state of charge and charging case lid detection are both critically important to earbuds with their small-capacity batteries and wireless connectivity. Current-sense amplifiers and Hall-effect sensors provide a solution for those struggling to design around these new features and challenges.

Additional resources

- Read the technical article, “Overcoming design challenges for low quiescent current in small, battery-powered devices.”

- Watch TI Precision Labs educational videos on current-sense amplifiers and magnetic sensors.

- Check out the current-sense amplifier and magnetic sensor technology overview pages.